Deepfake Technology and Account Takeovers: A New Era of Fraud

In the past few years, deepfake technology has caused serious concern across industries, from politics to cybersecurity. Synthetic media once seemed limited to viral videos and entertainment, but it has become a serious instrument of deception. One of the most alarming trends is the use of deepfakes in account takeover (ATO) fraud, in which attackers break into users’ accounts by impersonating the account holder or another trusted person. As artificial intelligence advances, the threats built on it grow more sophisticated as well.

What Are Deepfakes?

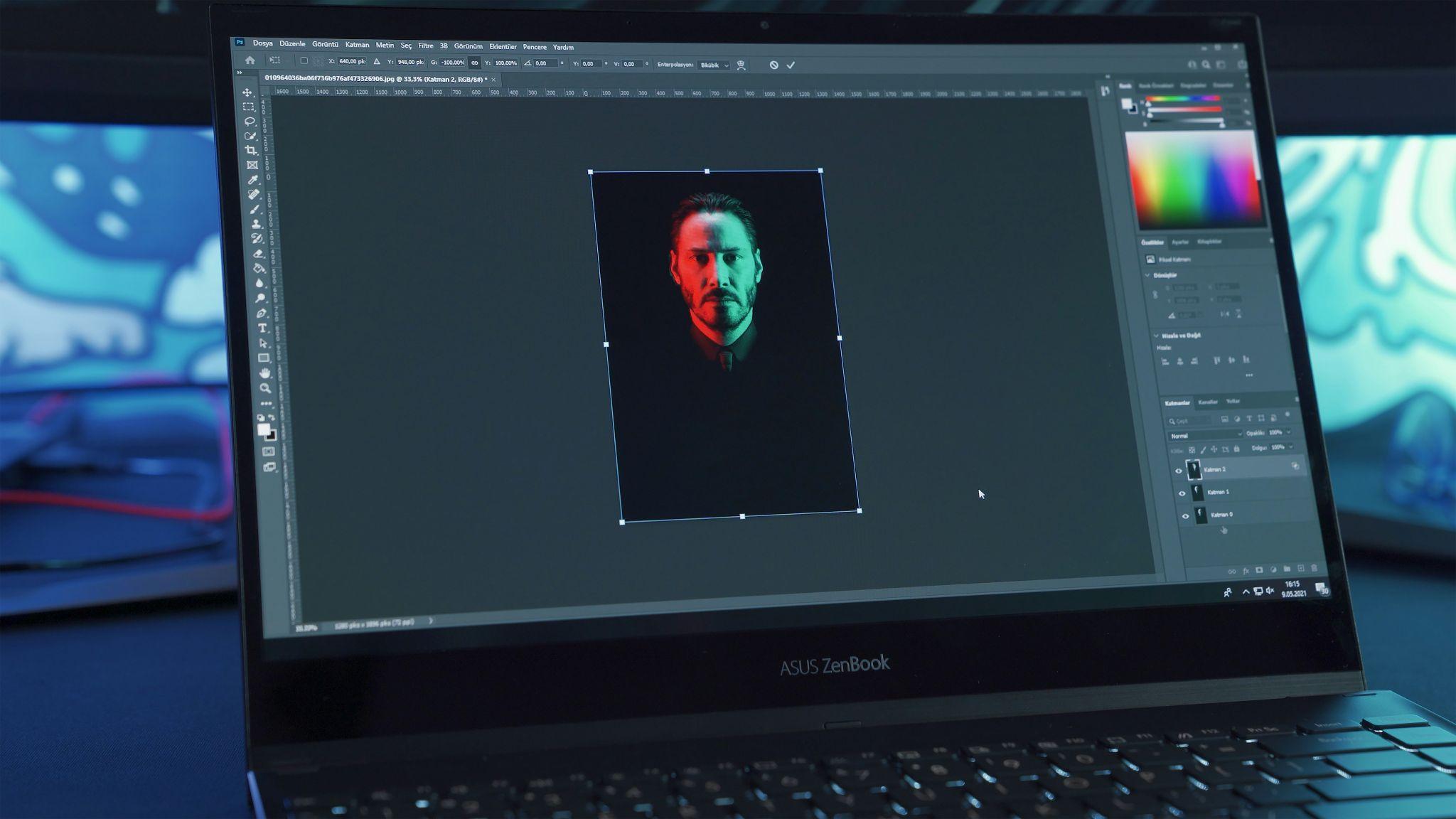

Deepfakes are AI-generated synthetic media produced with deep learning methods such as generative adversarial networks (GANs). These tools can realistically replicate a person’s face, voice, and mannerisms, increasingly in real time. Deepfakes began as a novelty for manipulating video, but they have since been weaponized for malicious purposes ranging from blackmail and misinformation to corporate fraud.

The Nature of Account Takeover Fraud

Account takeover fraud occurs when cybercriminals gain control of another person’s online account, such as a banking, email, social media, or enterprise software account. Traditionally, attackers relied on phishing, credential stuffing, or brute-force attacks to get in. Once inside, they can steal data, make transfers, or launch further attacks while posing as the victim.

With deepfake capabilities, however, fraudsters can now evade newer defenses, especially biometric verification systems that were previously considered effective barriers to impersonation.

How Deepfakes Are Driving Account Takeovers

The combination of deepfakes and ATO fraud is opening a dangerous new front. Here’s how deepfake-driven account takeovers typically unfold:

Voice Cloning in Customer Service Fraud: Call centers and financial institutions that rely on voice authentication are vulnerable to deepfake audio. Fraudsters can build a synthetic version of a victim’s voice from samples (e.g., clips posted on social media) and use it to pass security checks.

Video Deepfakes for KYC Bypass: Know Your Customer (KYC) procedures frequently use live video or facial recognition to confirm a user’s identity. Deepfake videos can spoof these procedures, particularly on platforms with weak liveness detection.

Business Email Compromise (BEC) 2.0: An emerging threat is the use of deepfaked video or voice calls to impersonate executives and ask employees to make urgent wire transfers or share confidential data.

This new wave of fraud is far more convincing than traditional impersonation attempts. And as more systems come to rely on biometric authentication and real-time identity verification, deepfakes that can bypass those checks become an increasingly effective attack tool.

Real-World Incidents

Several high-profile incidents point to the growing threat:

In 2020, attackers used an AI-generated voice to imitate a company executive and tricked an employee into transferring $200,000 to a fraudulent account.

In 2023, a UK-based financial firm detected an attempt to bypass KYC during onboarding with a deepfake video imitating a real customer. The attempt failed thanks to improved liveness detection, but it revealed a new dimension of fraud.

These examples show that attackers are not only technically capable but also quick to adapt to defensive countermeasures.

The Regulatory and Business Response

Regulators and firms are beginning to recognize the danger. Financial institutions, fintechs, and social media platforms are re-evaluating their identity verification protocols. Key responses include:

Enhanced Liveness Detection: Solutions that monitor micro-movements, blood flow, and other subtle signs of human presence are being integrated into KYC systems.

Multi-Factor Biometric Checks: Combining facial recognition with other signals, such as behavioral biometrics or voice cadence analysis, reduces dependence on any single means of authentication.

AI for Deepfake Detection: Companies are turning to AI-powered detection tools to find anomalies that indicate video or audio has been artificially manipulated, such as pixel distortion patterns and audio inconsistencies.

Regulatory bodies, particularly in Europe and North America, are also beginning to release guidance on the responsible use and detection of synthetic media.
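To make the multi-factor idea above more concrete, here is a minimal Python sketch of how several verification signals might be combined into one decision. Everything in it is an assumption for illustration: the `Signal` class, the `decide` function, the weights, the per-signal floors, and the 0.8 acceptance threshold are hypothetical and do not come from any specific vendor or standard. The point is only that a strong face match should not be able to outvote a failed liveness check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    """One verification signal (hypothetical structure for illustration)."""
    name: str
    score: float   # 0.0 (no confidence) to 1.0 (full confidence)
    weight: float  # relative importance in the combined score
    floor: float   # minimum score this signal must reach on its own


def decide(signals: list[Signal], accept_at: float = 0.8) -> str:
    """Return 'accept', 'step_up', or 'reject' for one verification attempt.

    All thresholds here are illustrative assumptions, not recommended values.
    """
    total_weight = sum(s.weight for s in signals)
    if not signals or total_weight <= 0:
        return "reject"

    # Signals that fall below their own minimum, however strong the others are.
    failed = [s for s in signals if s.score < s.floor]
    combined = sum(s.score * s.weight for s in signals) / total_weight

    if len(failed) >= 2:
        return "reject"
    if failed or combined < accept_at:
        # Ask for additional verification instead of trusting the average.
        return "step_up"
    return "accept"


attempt = [
    Signal("face_match", score=0.97, weight=0.4, floor=0.7),
    Signal("liveness", score=0.35, weight=0.4, floor=0.6),
    Signal("behavior", score=0.90, weight=0.2, floor=0.5),
]
print(decide(attempt))
```

In this example the face match is nearly perfect, yet the low liveness score falls under its floor, so the attempt is routed to additional verification rather than accepted. A real deployment would tune these values against measured false-accept and false-reject rates.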

Preparing for the Future

Proactive defense is critical for all organizations, and for finance, insurance, and tech companies in particular.

Educate Teams: Employees, particularly those in customer service and finance roles, should be trained to spot the signs of deepfake fraud.

Audit Existing Security Measures: If your systems rely on biometrics alone, it may be time to diversify.

Invest in AI Countermeasures: Fighting AI with AI is becoming a necessity. Real-time deepfake detection can add a critical layer of defense.

Collaborate Across Industries: Fraudsters move from one platform to another and from one country to another. Cross-industry collaboration will be important in combating synthetic identity fraud.
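As one illustration of diversifying beyond biometrics, the sketch below shows a simple policy that decides which extra checks a request needs before it is carried out. It is a hypothetical example: the `Request` fields, the action names, the check names, and the 10,000 amount limit are all assumptions chosen for the sketch, not an established rule set. The idea is that a convincing voice or face on a call should never be enough, on its own, to authorize a high-risk action.

```python
from dataclasses import dataclass

# Hypothetical list of actions treated as high risk in this sketch.
HIGH_RISK_ACTIONS = {"wire_transfer", "change_payout_account", "share_confidential_data"}


@dataclass(frozen=True)
class Request:
    """An incoming request to staff or a system (illustrative fields only)."""
    action: str
    amount: float
    channel: str                # e.g. "voice_call", "video_call", "email", "in_app"
    verified_out_of_band: bool  # confirmed through a separately known contact route


def required_checks(req: Request, amount_limit: float = 10_000.0) -> list[str]:
    """List the extra checks to complete before acting on the request."""
    checks: list[str] = []
    high_risk = req.action in HIGH_RISK_ACTIONS or req.amount >= amount_limit

    # Never rely on the caller's voice or face alone for a high-risk action.
    if high_risk and not req.verified_out_of_band:
        checks.append("callback_on_known_number")

    # Live audio and video are the channels deepfakes target, so add a second person.
    if high_risk and req.channel in {"voice_call", "video_call"}:
        checks.append("second_approver")

    return checks


urgent_call = Request("wire_transfer", 200_000.0, "voice_call", verified_out_of_band=False)
print(required_checks(urgent_call))
```

For the urgent voice request above, the policy asks for both a callback on a known number and a second approver. A routine, low-value in-app action would pass through with no extra checks.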

Final Thoughts

Deepfake technology, once a futuristic novelty, is now a present-day cybersecurity risk. Its use in account takeover fraud marks a turning point in the development of digital crime. As fraudsters grow more sophisticated, businesses and institutions have to adapt at the same speed. Countering AI-driven deception will take AI-led security that is agile and flexible, backed by vigilance, innovation, and persistence.